
Haziqa Sajid

Oct 31, 2024

Explore how AI hallucinations impact business operations and reputation, and learn how observability tools ensure accuracy, trust, and transparency in AI outputs.

Did you know that AI-generated content can sometimes be confidently incorrect? Experts call this an AI hallucination. Research has reported hallucination rates anywhere from 3% to 91%, depending on the model and the task. As organizations increase their reliance on AI, these hallucinations can disrupt operations and even damage reputations, especially in sectors where trust and accuracy are critical.

When left unchecked, AI hallucinations can “snowball,” where one initial error cascades into a series of false information, amplifying the risks of large-scale misinformation. This is where AI observability can help businesses monitor and manage AI systems in real time.

Combining AI observability with strong governance and validation processes empowers businesses to maximize the benefits of AI while minimizing its risks. Let’s explore the major risks businesses face because of AI hallucinations and how proactive AI oversight can help.

Why Does AI Hallucinate?

AI hallucinations stem from specific design factors in how AI models learn and make predictions. Here are the most common causes:

1. The Role of the Loss Function

A key factor is the loss function, which guides training by adjusting a model’s confidence in its predictions rather than by checking them for accuracy. As AI expert Andriy Burkov explains, the loss function applies small penalties to incorrect predictions but doesn’t prioritize factual correctness. This makes “truth a gradient rather than an absolute,” meaning AI is optimized for confidence rather than strict accuracy.
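To see what “truth as a gradient” means in practice, here is a minimal Python sketch of next-token cross-entropy, a common training loss for language models. The prompt, the candidate answers, and their probabilities are made up for illustration. The loss only measures how much probability the model gave to the token that appeared in the training text, so a mostly-right guess costs little, and nothing in the calculation checks whether a statement is true.

```python
import math

def cross_entropy(probs: dict[str, float], target: str) -> float:
    """Next-token cross-entropy: the negative log of the probability given to the target."""
    return -math.log(probs[target])

# Hypothetical next-token distributions for the prompt "The capital of Australia is".
# The training text continues with "Canberra", so that is the target token.
distributions = {
    "confident_right": {"Canberra": 0.90, "Sydney": 0.08, "Melbourne": 0.02},
    "hedged_right": {"Canberra": 0.55, "Sydney": 0.40, "Melbourne": 0.05},
    "confident_wrong": {"Canberra": 0.10, "Sydney": 0.85, "Melbourne": 0.05},
}

for name, probs in distributions.items():
    # Loss shrinks smoothly as probability on the target grows; there is no pass/fail step.
    print(f"{name}: loss = {cross_entropy(probs, 'Canberra'):.3f}")
```

The penalty changes smoothly instead of flipping between “right” and “wrong,” and if the training text itself contained an error, the same formula would reward the model for repeating it.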

2. Lack of Internal Certainty

AI models also lack an internal sense of certainty. Burkov further notes that these models don’t know “what they know.” Instead, they treat all data points the same way, so they answer every query with similar confidence. As a result, they often give confidently incorrect answers, even on topics they know little about.

3. Statistical Patterns and Probability

AI models can hallucinate regardless of data quality. Because these models generate responses from statistical patterns rather than verifying truth, hallucinations are an unavoidable byproduct of their probability-driven design.

Additionally, LLMs rely heavily on contextual associations to create coherent answers, which works well for general topics. However, models may invent details when data is limited or the context is unclear. One study of LLM hallucinations suggests that while calibration tools like knowledge graphs improve accuracy, hallucinations persist because of limitations in the models’ design.

4. Balancing Creativity and Accuracy

Many models are designed to offer engaging, varied responses. While this creativity can be appealing, it can also lead to factual inaccuracies. According to one arXiv paper, AI’s push for novelty increases the likelihood of hallucinations.
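The article doesn’t name a specific mechanism, but one common knob behind this trade-off is sampling temperature. The sketch below, using made-up scores for three candidate answers, shows how raising the temperature flattens the probability distribution so that less-supported answers get sampled more often. The function names and values are illustrative assumptions, not taken from any particular model or API.

```python
import math
import random

def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; higher temperature flattens the distribution."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    top = max(scaled.values())  # subtract the max before exp() for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample(probs: dict[str, float], rng: random.Random) -> str:
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical scores: "Canberra" is the best-supported answer to "The capital of Australia is".
scores = {"Canberra": 4.0, "Sydney": 2.0, "Melbourne": 1.0}
rng = random.Random(0)  # fixed seed so runs are repeatable

for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(scores, temperature)
    wrong = sum(sample(probs, rng) != "Canberra" for _ in range(1000))
    print(f"T={temperature}: P(Canberra)={probs['Canberra']:.2f}, other answers in 1000 draws: {wrong}")
```

With these scores, the probability of the best-supported answer falls from roughly 0.98 at T=0.5 to about 0.63 at T=2.0, so more varied output comes at the cost of more wrong answers.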

The Impact of AI Hallucinations on Business Operations

When AI generates plausible but false information, it can disrupt business functions, often resulting in costly or even harmful outcomes. Now, let’s examine the risks of AI hallucinations across different sectors and explore practical strategies for reducing their impact.

1. Customer Service

AI hallucinations in customer service can damage brand trust by generating erroneous responses. Imagine an AI chatbot providing incorrect product details or policies. It might tell a customer a product has a feature it doesn’t, or give the wrong return policy. Misinformation like this can frustrate customers and erode their trust in your brand.

Therefore, it’s important to implement human handovers and accuracy monitoring in AI-driven customer service. Experts recommend fail-safes like automatic escalation to human representatives when the AI generates uncertain responses.
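One way to approximate an “uncertain response” trigger is to look at the token log-probabilities that many model APIs can return. The sketch below is an illustration under stated assumptions: the `BotReply` class, the `route` function, the 0.7 threshold, and the sample replies are all hypothetical. Token probability is only a rough proxy, since, as noted earlier, models can be confidently wrong, so treat it as one signal alongside accuracy monitoring rather than a complete safeguard.

```python
import math
from dataclasses import dataclass

@dataclass
class BotReply:
    """A chatbot answer plus per-token log-probabilities (hypothetical structure)."""
    text: str
    token_logprobs: list[float]

def mean_token_prob(reply: BotReply) -> float:
    """Geometric-mean probability per token: exp of the average log-probability."""
    return math.exp(sum(reply.token_logprobs) / len(reply.token_logprobs))

def route(reply: BotReply, min_confidence: float = 0.7) -> str:
    """Send low-confidence replies to a human agent instead of straight to the customer."""
    # No probabilities available means no confidence signal, so fail safe and escalate.
    if not reply.token_logprobs or mean_token_prob(reply) < min_confidence:
        return "escalate_to_human"
    return "send_to_customer"

# Hypothetical replies about a return policy.
sure = BotReply("Returns are accepted within 30 days.", [-0.05, -0.10, -0.02, -0.08])
unsure = BotReply("Returns are accepted within 90 days.", [-0.05, -1.20, -0.90, -0.60])
print(route(sure))    # send_to_customer
print(route(unsure))  # escalate_to_human
```

The 0.7 cutoff is arbitrary here; in practice you would tune it against transcripts that humans have already labeled as correct or incorrect.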

2. Finance

AI hallucinations in finance could lead to incorrect stock predictions or flawed credit assessments. For example, an AI may incorrectly label a risky investment as “safe” or downgrade a credit score unjustly. In finance, such errors not only harm clients but can also lead to compliance violations and financial losses.

This is why you must treat AI insights as a “second opinion” rather than the final say. Implement AI as a support tool for financial advisors, allowing experts to verify AI-generated insights with human judgment for balanced decisions.

3. Healthcare

AI hallucinations in healthcare can lead to incorrect diagnoses or inappropriate treatment suggestions. For instance, an AI might misinterpret patient symptoms, endangering patient safety and exposing healthcare providers to liability. To use AI extensively in healthcare, you must establish protocols for clinicians to review all AI-driven recommendations. Tools that verify AI recommendations against knowledge graphs can also help.

4. Supply Chain Management

AI hallucinations in demand forecasting can lead to supply chain issues like overstocking or stockouts, impacting revenue. For example, AI might inaccurately predict a high demand, leading to excess inventory, or underpredict and cause missed sales.

Supply chain operations depend on precise forecasting, so AI errors can disrupt logistics and inventory management. Combine AI-driven forecasts with historical data and observability tools so that supply chain managers can review and adjust predictions before acting on them.
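As a simple illustration of pairing AI forecasts with historical data, the sketch below flags any forecast that sits far outside recent demand so that a supply chain manager reviews it before it drives orders. The `review_forecast` helper, the three-standard-deviation cutoff, and the demand figures are hypothetical. A plain z-score ignores seasonality and promotions, so a production check would need a better baseline.

```python
from statistics import mean, stdev

def review_forecast(history: list[float], ai_forecast: float, max_z: float = 3.0) -> dict:
    """Flag an AI demand forecast that falls far outside historical demand.

    `history` needs at least two observations so a standard deviation exists.
    """
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # Flat history: any departure from the constant level deserves a look.
        return {"historical_mean": mu, "z_score": None, "needs_manager_review": ai_forecast != mu}
    z = (ai_forecast - mu) / sigma
    return {"historical_mean": mu, "z_score": z, "needs_manager_review": abs(z) > max_z}

# Hypothetical weekly unit sales for one product.
weekly_units = [120, 130, 125, 118, 127, 135, 122, 128]
print(review_forecast(weekly_units, ai_forecast=410))  # far above history: flagged
print(review_forecast(weekly_units, ai_forecast=131))  # in line with history: not flagged
```

The flag does not overrule the model; it simply routes unusual numbers to a person who knows about upcoming promotions, supplier issues, or other context the model may lack.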

5. Drug Discovery

In drug discovery, AI hallucinations can mislead research, potentially identifying ineffective compounds as promising, which wastes time and resources. Given the high cost of drug development, such errors can be financially significant.

Therefore, you must use a rigorous multi-step validation process, where AI predictions undergo verification through peer-reviewed scientific methods. AI should support initial screening, followed by experimental and clinical testing to ensure that research is accurate and evidence-based.

6. AI Agents in Automation Workflows

Using AI agents in business workflows brings efficiency but also carries inherent risks. AI, by nature, can deliver responses with high confidence, even when its answers are incorrect. This tendency can disrupt workflows and lead to significant consequences.

For example, if an AI agent misinterprets or inaccurately conveys a company policy, it can mislead employees, confuse customers, and create operational setbacks. Such misinformation isn’t just an inconvenience; it can escalate quickly into financial losses, reputational harm, and even legal issues.

A recent incident with Air Canada demonstrates this well. The airline’s chatbot provided incorrect ticket information to a passenger, leading to misunderstandings and forcing the company to issue a refund. Errors like these not only result in financial loss but also erode public trust in the organization’s services.

Implement a “human-in-the-loop” model where critical automation steps undergo human review. Human oversight in compliance-sensitive workflows can improve reliability and prevent such costly errors.

Reputational Harm from AI Hallucinations

As AI becomes more integrated into business processes, hallucinations pose a growing risk to organizational reputation. AI-generated errors can cause public mistrust, particularly when audiences cannot easily distinguish AI-generated content from human-created information.

This confusion grows when AI delivers misleading but seemingly credible details, making it hard for users to verify accuracy. Research published by the Association for Computing Machinery (ACM) shows that unchecked AI misinformation can directly damage organizational credibility.

Legal implications add another layer of complexity to managing AI hallucinations. If an AI system inadvertently generates defamatory or misleading content, it can expose the organization to both reputational harm and legal liability. This becomes especially concerning when AI makes false claims about individuals or other companies, as current legal frameworks struggle to hold AI accountable for such outputs.

Legal experts emphasize the importance of monitoring AI content to prevent reputational harm and avoid complex liability issues that can arise from AI-driven defamation. Beyond legal risks, brands face an elevated risk of damage from false narratives as AI-driven misinformation becomes increasingly convincing.

Real-Life Examples of AI Hallucinations

AI hallucinations present real risks across many domains. If unchecked, they can damage reputations, trigger legal issues, and erode public trust. Here’s how each type of hallucination can impact your business and why you should stay vigilant:

1. Legal Document Fabrication

AI can sometimes "invent" legal cases that sound real but have no factual basis. This was the case for an attorney who used AI to draft a motion, only to find the model had fabricated cases entirely. The attorney faced fines and sanctions for relying on this fictitious information.

If you work with AI in legal settings, treat it like an intern with potential but no law degree. Verify every case and citation it generates. Triple-check every output for accuracy before it goes anywhere near a courtroom.

2. Misinformation About Individuals

In 2023, ChatGPT falsely accused a law professor of harassing his students. Similarly, an AI model wrongly named an Australian mayor, who had in fact been a whistleblower, as a culprit in a bribery scandal. This kind of misinformation can quickly go viral on social media, damaging both personal and professional reputations.

This is why you must always double-check the facts if AI-generated content mentions specific people. Implement strict guidelines to prevent AI from creating content on sensitive topics without human verification.

3. Invented Historical Records

AI models can also fabricate historical events that never happened. For example, when ChatGPT was asked, “What is the world record for crossing the English Channel entirely on foot?” it produced a nonsensical response, claiming, “The world record for crossing the English Channel entirely on foot is held by Christof Wandratsch of Germany.” While these fabrications can seem harmless, they could mislead employees, students, or customers, creating a cycle of misinformation. If you plan to use AI in your business, rely on trusted sources and cross-reference details before publishing.

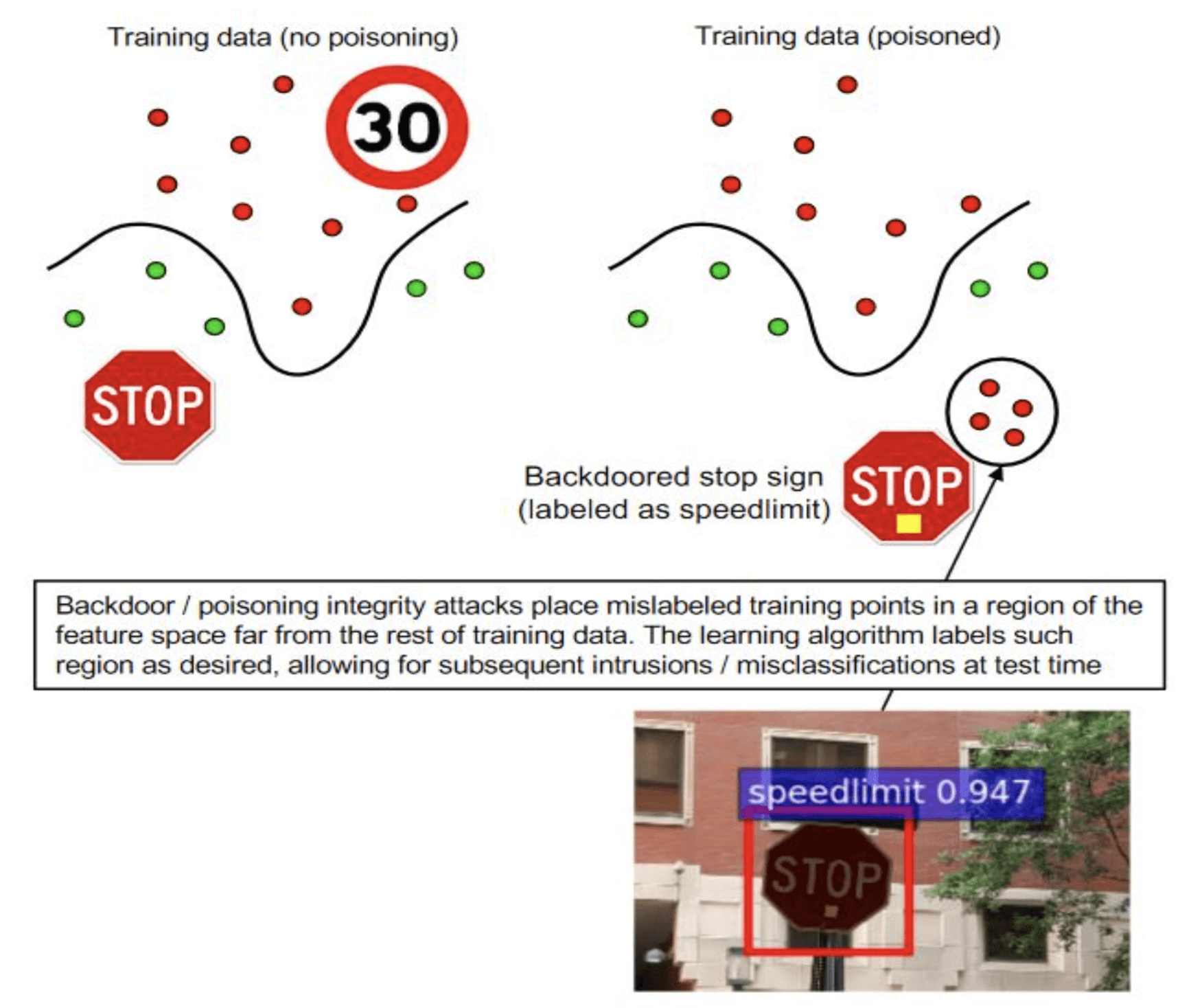

4. Adversarial Attacks

Adversarial attacks trick AI into producing incorrect or dangerous outputs. One study found that adversarial examples led deep neural networks (DNNs) to misclassify malware in over 84% of cases.

For instance, adding small stickers or patches to a “Stop” sign could cause a self-driving car’s AI to misclassify it as a “Speed Limit” sign. Human drivers would barely notice such a subtle manipulation, yet it can deceive the AI into ignoring a critical stop command.
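The stop-sign attack is a physical variant of a well-known idea: nudge each input feature slightly in whichever direction most increases the model’s error. The sketch below applies that idea, the Fast Gradient Sign Method (FGSM), to a toy four-feature logistic-regression classifier. The weights, input, and perturbation size are invented for illustration. Real attacks on image models operate over thousands of pixels, where much smaller per-pixel changes can add up to flip a prediction.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(w: list[float], b: float, x: list[float]) -> float:
    """Toy logistic-regression score: probability that x is class 1 ("stop sign")."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w: list[float], b: float, x: list[float], y_true: int, eps: float) -> list[float]:
    """Fast Gradient Sign Method for logistic regression.

    With binary cross-entropy loss, the gradient with respect to the input is (p - y) * w,
    so each feature moves by eps in the sign direction that increases the loss.
    """
    p = predict(w, b, x)
    grad = [(p - y_true) * wi for wi in w]
    return [xi + eps * math.copysign(1.0, g) if g != 0 else xi for xi, g in zip(x, grad)]

# Hypothetical weights and a correctly classified "stop sign" input.
w, b = [1.5, -2.0, 1.0, 0.5], -0.2
x = [0.6, 0.1, 0.4, 0.3]

p_orig = predict(w, b, x)
x_adv = fgsm(w, b, x, y_true=1, eps=0.25)
p_adv = predict(w, b, x_adv)
print(f"P(stop) before: {p_orig:.2f}, after perturbation: {p_adv:.2f}")
```

No feature moves by more than 0.25, yet the combined shift pushes the score below 0.5 and the toy model no longer sees a stop sign.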

The potential for harm is enormous, especially in high-security fields such as banking, biometrics, and autonomous driving. If you work in AI-dependent fields, invest in defensive measures. Reduce vulnerabilities with encryption, AI observability, audits, and regular security assessments.

How Can AI Observability Help?

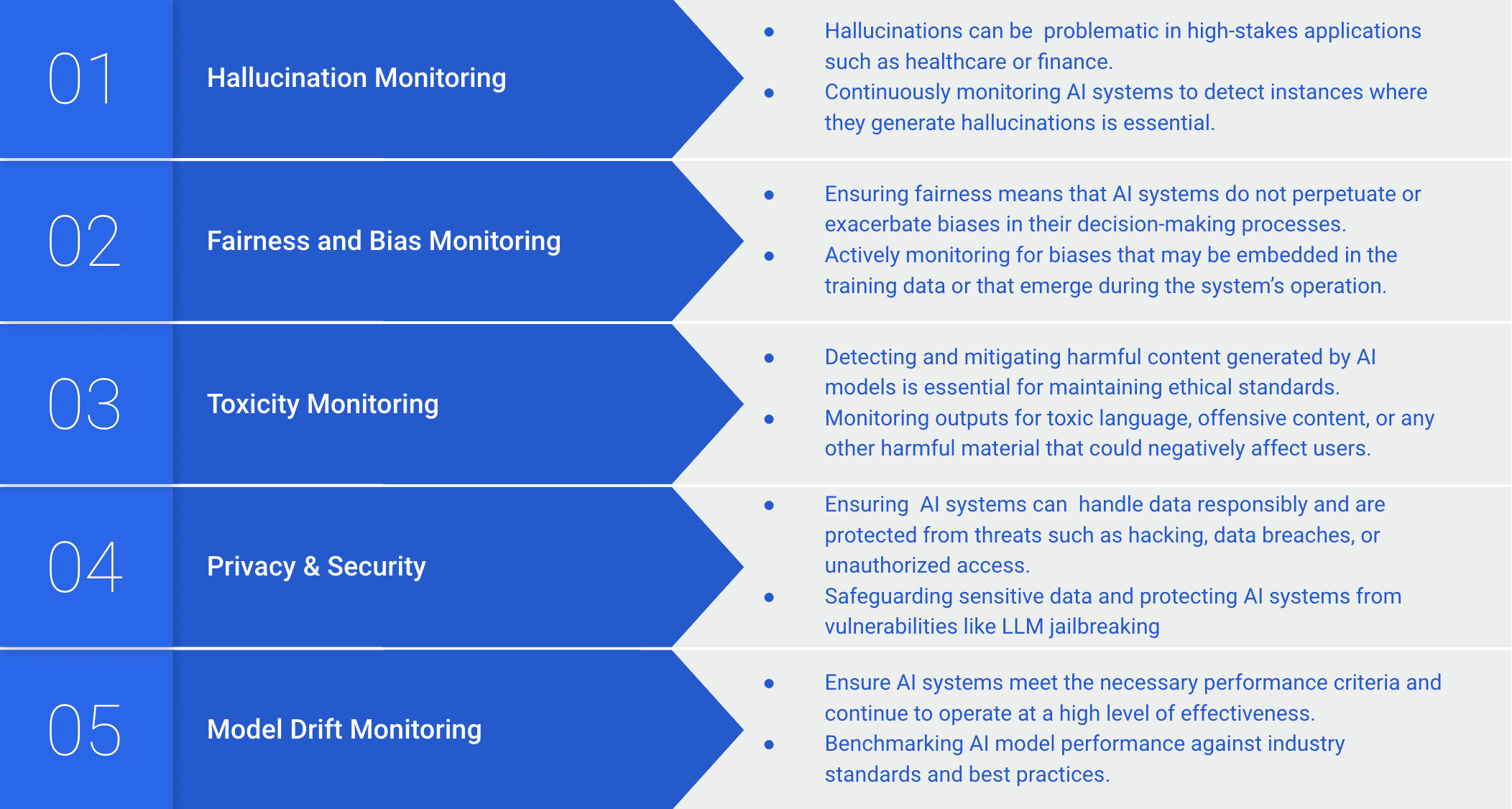

Proactive steps like AI observability allow you to catch AI errors before they reach your users. Observability involves real-time monitoring and validation of AI outputs, which means your team can detect hallucinations early and prevent misinformation from spreading. One arXiv study shows that real-time validation frameworks make AI more reliable, giving you better oversight and accountability. Here are the various ways AI observability helps your business:

Early Detection of Errors: With real-time observability, you can catch AI errors before they reach your customers, enabling immediate correction. This proactive detection reduces the risk of misinformation spreading, which is critical for customer-facing applications. Methods like passage-level self-checks can spot inaccuracies quickly, so errors can be addressed at the source.
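Sampling-based self-checks such as SelfCheckGPT rest on a simple observation: if you ask a model the same question several times, facts it reliably learned tend to reappear, while hallucinated details vary between answers. The sketch below is a deliberately crude stand-in that scores each sentence of an answer by word overlap with re-sampled answers; published methods use stronger comparisons such as natural-language inference. The function names, the 0.6 threshold, and the example texts are hypothetical, and the re-sampled answers are assumed to come from querying your model again.

```python
import re

def _words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, e.g. "$10" becomes "10"."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def support_score(sentence: str, samples: list[str]) -> float:
    """Average share of the sentence's words that also appear in each re-sampled answer."""
    words = _words(sentence)
    if not words or not samples:
        return 0.0
    return sum(len(words & _words(s)) / len(words) for s in samples) / len(samples)

def flag_unsupported(answer: str, samples: list[str], threshold: float = 0.6) -> list[str]:
    """Return sentences of `answer` that the re-sampled answers do not consistently repeat."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [s for s in sentences if support_score(s, samples) < threshold]

# Hypothetical main answer plus two re-sampled answers to the same customer question.
answer = "Our basic plan costs $10 per month. It includes 24/7 phone support."
samples = [
    "The basic plan is $10 per month and includes email support.",
    "Basic costs $10 per month, with email support only.",
]
print(flag_unsupported(answer, samples))  # ['It includes 24/7 phone support.']
```

The price is repeated across samples and passes, while the “24/7 phone support” detail appears only once and gets flagged for review.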

Boosting Transparency and Building Trust: Observability goes beyond error detection; it provides insight into how AI makes decisions, which builds trust with users and stakeholders. Continuous validation of AI content, especially in complex, multi-step tasks, helps prevent “snowballing” errors where one mistake leads to others. This ongoing correction keeps results consistent and accurate, which is crucial for credibility in customer interactions and public-facing information.

Real-Time Validation for High-Stakes Applications: Real-time observability frameworks monitor and validate AI-generated information on the fly, minimizing errors before they escalate. For instance, Pythia's knowledge graph benchmark enhances LLM accuracy to 98.8% by actively validating claims as they are generated. This proactive approach to observability is invaluable in fields like healthcare and finance, where accuracy is non-negotiable.
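The general idea of checking generated claims against a knowledge graph can be sketched in a few lines. This is not Pythia’s implementation: the company, facts, relation names, and `check_claim` function are hypothetical, and the sketch assumes claims have already been extracted from the model’s output as subject-relation-object triples, which in practice is a hard problem of its own.

```python
from typing import NamedTuple

class Triple(NamedTuple):
    subject: str
    relation: str
    obj: str

# Hypothetical verified facts about a fictional company.
KNOWLEDGE_GRAPH = {
    Triple("Acme Corp", "headquartered_in", "Toronto"),
    Triple("Acme Corp", "founded_in", "1998"),
    Triple("Acme Corp", "return_window_days", "30"),
}
# Relations that allow only one value per subject, so a mismatch counts as a contradiction.
SINGLE_VALUED = {"headquartered_in", "founded_in", "return_window_days"}

def check_claim(claim: Triple, kg: set[Triple], single_valued: set[str]) -> str:
    """Label a generated claim as supported, contradicted, or unverifiable."""
    if claim in kg:
        return "supported"
    if claim.relation in single_valued and any(
        t.subject == claim.subject and t.relation == claim.relation for t in kg
    ):
        return "contradicted"
    return "unverifiable"  # not in the graph: route to review rather than assume it is false

# Claims assumed to be already extracted from a model's answer.
claims = [
    Triple("Acme Corp", "founded_in", "1998"),
    Triple("Acme Corp", "return_window_days", "90"),
    Triple("Acme Corp", "ceo", "Jane Doe"),
]
for c in claims:
    print(c, "->", check_claim(c, KNOWLEDGE_GRAPH, SINGLE_VALUED))
```

Separating “contradicted” from “unverifiable” matters: the first is a likely hallucination to block, while the second only means the graph has no data and a human or another source should decide.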

While AI brings transformative potential to your business, it also introduces challenges, such as hallucinations, inaccuracies, and reputational risks. When AI confidently provides incorrect information, it can mislead teams, impact decision-making, and reduce trust among clients and stakeholders. These errors can result in financial losses and harm your brand’s credibility.

To address these risks, observability practices are essential. Real-time observability allows you to catch and correct hallucinations as they occur. Tools like Real-Time Hallucination Detection and Knowledge Graph Integration provide immediate fact-checking and context alignment, helping ensure AI-generated insights are based on verified data.

AI observability also supports adaptability through continuous improvement. Task-specific accuracy checks monitor performance, while custom dataset integration aligns outputs with your industry standards. Importantly, embedding observability helps ensure that AI supports your goals with precision and reliability.

If you're ready to elevate the reliability of your AI models, contact us at Pythia AI today. Discover how Pythia’s observability platform can help your organization achieve excellence in AI monitoring, accuracy, and transparency.