Haziqa Sajid

Nov 11, 2024

As a compliance officer, you’ve likely heard that AI can transform your organization’s efficiency. And it’s true—when used thoughtfully, AI can streamline operations and allow your team to do more with fewer resources. In fact, IBM research shows that about 42% of organizations are already integrating AI into their processes.

However, AI also brings unique risks that demand diligent monitoring and governance. As AI becomes essential to business operations, it must remain transparent, fair, and compliant with emerging regulations. So, how do you keep AI within these boundaries?

This is where AI observability platforms like Pythia come in. With continuous visibility into AI systems, Pythia helps organizations meet current regulatory demands and stay prepared for future requirements. In this guide, we’ll explore how Pythia can help your organization navigate the complex landscape of AI compliance.

What are the Major AI Compliance and Governance Standards?

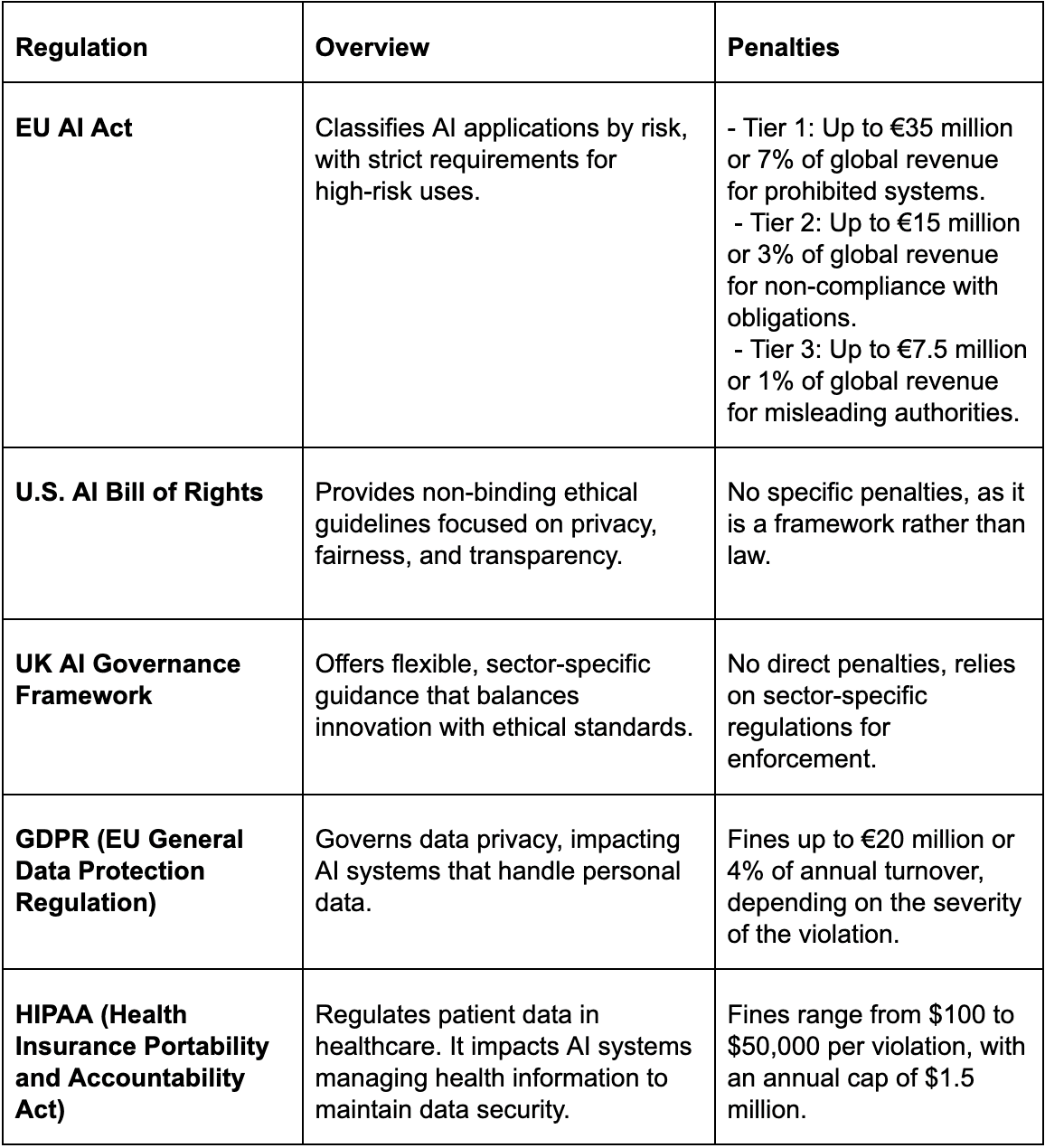

At the heart of AI governance are four golden rules: safety, transparency, accountability, and fairness. These principles lay the foundation for trustworthy AI. Key regulations and frameworks, like the EU AI Act, the U.S. Blueprint for an AI Bill of Rights, and the UK’s AI Governance Framework, focus on these core principles to keep users protected and promote ethical AI practices across industries.

Let’s take a closer look at these principles.

Safety

As AI advances into critical sectors like healthcare and automobiles, safety becomes a non-negotiable standard. Imagine an autonomous vehicle maneuvering through a crowded city intersection. A single error here isn’t just a minor glitch but a potential threat to public safety.

Whether your focus is healthcare, transportation, or consumer applications, your AI must be equipped to handle the unexpected with precision. Research highlights this need: studies published on Emerald Insight show that even advanced AI systems still face challenges in unpredictable real-world environments.

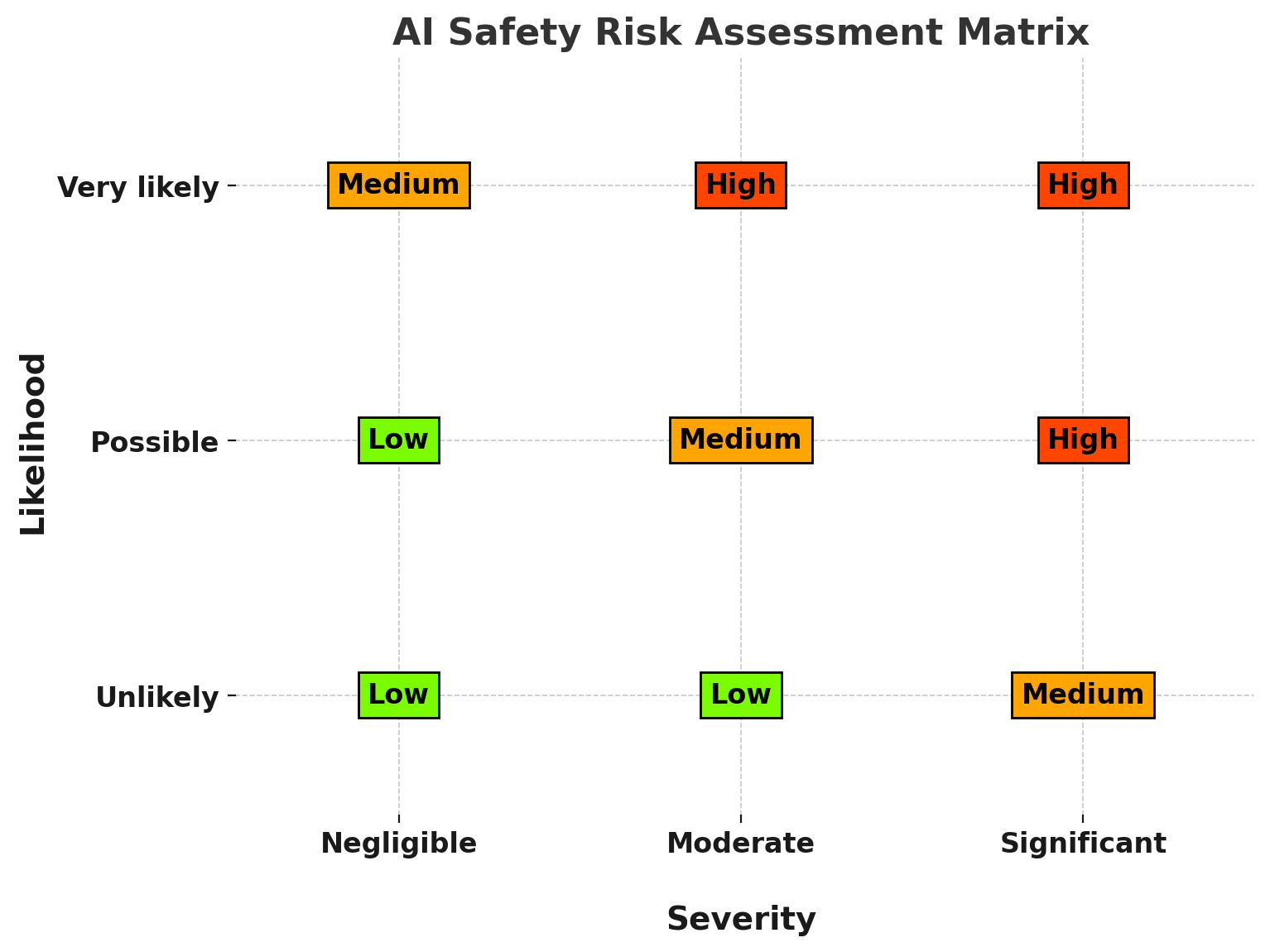

To align with evolving AI regulations, consider conducting a thorough risk assessment. It helps you evaluate potential risks along two dimensions: severity and likelihood.

Severity: Ranges from “Negligible” to “Significant,” indicating the potential impact of a risk.

Likelihood: Assesses how often a specific risk might arise.

Understanding these dimensions enables you to build safer, more reliable AI systems prepared for real-world complexity.
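To make the two dimensions concrete, here is a minimal sketch of a risk matrix in Python. Only the “Negligible” and “Significant” endpoints come from the scale above; the intermediate severity levels, the likelihood labels, and the score thresholds are illustrative assumptions, not values taken from any regulation.

```python
from enum import IntEnum


class Severity(IntEnum):
    # Endpoints follow the scale described above; the middle levels are assumed.
    NEGLIGIBLE = 1
    MINOR = 2
    MODERATE = 3
    SIGNIFICANT = 4


class Likelihood(IntEnum):
    # Hypothetical labels for how often a risk might arise.
    RARE = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4


def risk_score(severity: Severity, likelihood: Likelihood) -> int:
    """Combine both dimensions into a single score (severity x likelihood)."""
    return int(severity) * int(likelihood)


def risk_level(severity: Severity, likelihood: Likelihood) -> str:
    """Map the score to a coarse level; the cut-offs are illustrative only."""
    score = risk_score(severity, likelihood)
    if score >= 12:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


if __name__ == "__main__":
    print(risk_level(Severity.SIGNIFICANT, Likelihood.LIKELY))
    print(risk_level(Severity.NEGLIGIBLE, Likelihood.LIKELY))
```

In practice, your organization would set the levels and thresholds to match its own risk appetite and the regulations that apply to it.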

Transparency

Transparency is essential to AI compliance and user trust. The EU AI Act prioritizes this by mandating clear, actionable guidelines for transparency in high-risk AI systems. As a compliance officer, your role is to ensure users and deployers understand how AI systems function, what data they process, and the reasoning behind their decisions. This clarity builds confidence in AI and empowers users to make informed choices.

For general-purpose AI, transparency obligations go further. The Act requires clear labels on AI-generated content, such as “deepfake” images and videos. Users must be able to easily identify AI content to maintain trust in the digital environment.

GDPR principles align closely with these transparency requirements. Recital 60 emphasizes clarity in how personal data is used, especially for AI-assisted decisions. Providing clear explanations when using data to train AI reinforces user trust and ensures fair processing.

As you implement these guidelines, focus on clarity. Give users the information they need to understand AI decisions that impact them. This approach supports the EU AI Act’s mission to create a trustworthy AI ecosystem and push your organization towards ethical AI compliance.

Accountability

In high-stakes areas like finance, healthcare, and customer analytics, the impact of AI decisions demands a clear line of responsibility. Incorporating accountability in AI ensures that every AI-assisted decision is traceable and defensible.

Therefore, your AI system needs to be compliant with principles like data minimization, accuracy, and transparency. You must document how your AI system arrives at specific decisions and provide clear explanations to individuals impacted by AI-driven outcomes. When a customer or user questions an AI decision, there should be a well-defined path to review and explain the reasoning behind it.

Fairness

Fairness in AI means treating all users equitably, regardless of their background or demographics. This principle is essential, especially when AI systems influence important personal outcomes like hiring, financial approvals, or product recommendations.

As a compliance officer, your role is to ensure these AI systems prevent discrimination from the start. The EU’s AI Act aligns with this goal by requiring companies to integrate non-discrimination directly into the design phase. However, AI’s complexity makes bias detection and proof challenging.

Studies reveal that without strict fairness protocols, AI can inadvertently embed and even amplify social biases. Therefore, AI systems require your strategic oversight to ensure fairness is applied in practical, real-world settings.

AI Regulations and Penalties for Non-Compliance

Challenges in Meeting AI Compliance Requirements

Today’s AI regulations set strict standards, especially for high-risk applications, focusing on transparency and accountability. Meeting these standards requires robust monitoring tools that exceed traditional compliance checks.

Hallucination and Bias Risks

Ensuring compliance in AI is complex, especially in high-stakes fields where hallucination and bias present significant risks. AI hallucinations not only violate compliance standards but also compromise user safety. Med-HALT (Medical Domain Hallucination Test) benchmarks reveal that even advanced models are prone to producing hallucinations.

Bias is an equally pressing concern. Gender biases in AI, for instance, have been documented across multiple studies. A 2023 UCLA study found that ChatGPT and Stanford’s Alpaca model used descriptors like “expert” and “integrity” for male candidates, but “beauty” and “delight” for female candidates in recommendation letters.

Data Privacy and Security Concerns

HIPAA and GDPR’s strict data protection standards set a high bar for industries like healthcare and finance, where large datasets—particularly those involving sensitive data—can increase the risk of data breaches and privacy violations. As the volume of data grows, these issues need to be carefully addressed, as research from MDPI highlights.

Additionally, GDPR’s “privacy by design” principle requires privacy protections at every stage. For organizations with legacy systems, meeting this standard is complex and costly. On the other hand, AI solutions relying on the cloud also face increased risk. Managing privacy across multiple cloud providers is challenging, as each brings unique security vulnerabilities.

To stay compliant, you must integrate privacy protection in your AI system, invest in secure systems, and remain vigilant about data security at every level.

Model Drift and Performance Consistency

Model drift occurs when shifts in data or networks reduce an AI model’s accuracy over time. This drift is common across sectors and, if unaddressed, impacts compliance and system reliability.

In healthcare, changes in imaging equipment or patient demographics can lower a model’s accuracy, affecting patient outcomes and safety standards. In IT, AIOps systems rely on AI to detect failures. Concept drift here can lead to unreliable predictions and compromised system performance.

Addressing drift requires continuous monitoring, targeted data strategies, and early detection. Regular performance checks ensure that AI models remain accurate and compliant, even as data patterns evolve.
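One common way to run such a check is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below is a generic, standard-library illustration and does not represent how any particular platform detects drift. The bin count and the 0.25 alert cut-off are assumptions based on a widely used rule of thumb.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        eps = 1e-6  # floor so empty bins do not cause log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


DRIFT_THRESHOLD = 0.25  # assumed cut-off; tune it for your own models

if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    recent = [v + 0.5 for v in baseline]  # simulated shift in the data
    score = psi(baseline, recent)
    print("drift detected" if score > DRIFT_THRESHOLD else "stable")
```

A score of zero means the two samples fall into the bins in identical proportions. Larger values indicate a stronger shift and a stronger case for reviewing or retraining the model.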

To address these challenges and ensure AI compliance, organizations need a robust solution: an AI observability platform. Let’s see how it helps.

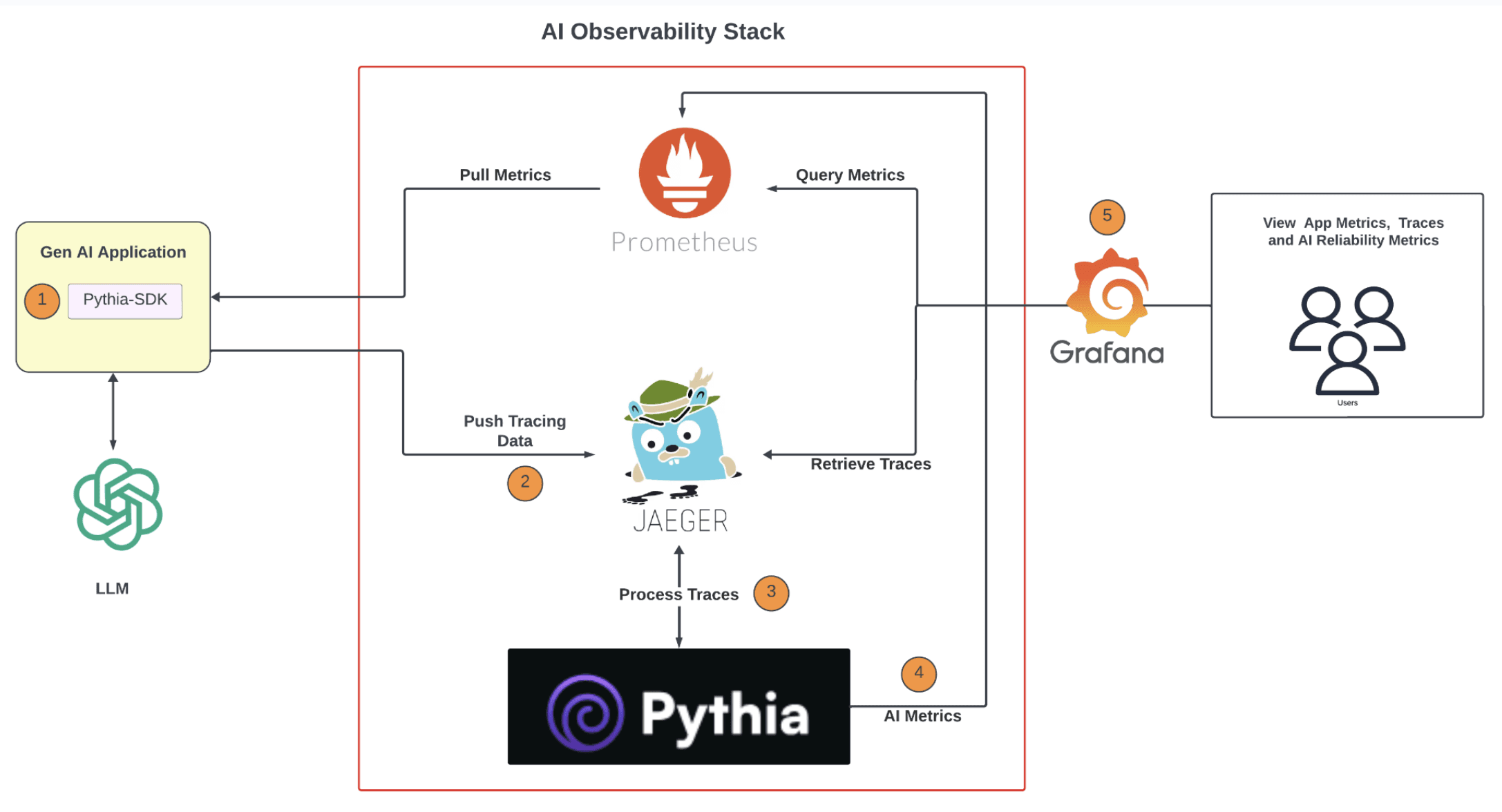

Introducing Pythia: An AI Observability Platform for Compliance and Governance

Pythia is a powerful AI observability platform designed to improve reliability, accuracy, and compliance in AI systems. It offers you all the tools you need to directly address the core challenges of AI governance and uphold ethical and transparent practices.

Equipped with advanced detection and monitoring features, Pythia enables teams to track, analyze, and refine AI model performance in real time.

Bias and Fairness Monitoring

Pythia’s bias and fairness monitoring tools offer real-time insights into the potential biases within AI outputs. Features like input validators actively screen incoming data to ensure that only complete, accurate, and neutral data is used in AI assessments.

For instance, if a candidate profile includes personally identifiable information (PII) or irrelevant attributes like age or ethnicity, Pythia’s system detects and filters out this information. Likewise, Pythia’s output validators work in parallel to scan for inconsistencies, stereotypes, and potential biases.

Combining continuous fairness monitoring with strict input and output validations provides a full-spectrum approach to managing AI bias. This setup minimizes unintended consequences and helps prevent AI models from reinforcing societal biases.

When input or output checks fail, Pythia logs each instance to create a clear audit trail. This allows you to identify and address recurring data issues over time.
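The idea of an input validator that leaves an audit trail can be sketched in a few lines of Python. This is a simplified illustration, not Pythia’s actual API: the function name `validate_input`, the `PROTECTED_FIELDS` set, and the log entry format are all hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical list of attributes that should not influence the assessment.
PROTECTED_FIELDS = {"age", "ethnicity"}


def validate_input(profile: dict, audit_log: list) -> dict:
    """Strip protected attributes and record what was removed."""
    removed = sorted(k for k in profile if k.lower() in PROTECTED_FIELDS)
    cleaned = {k: v for k, v in profile.items() if k.lower() not in PROTECTED_FIELDS}
    if removed:
        # Each failed check becomes one audit entry that can be reviewed later.
        audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "check": "protected_attributes",
            "removed_fields": removed,
        })
    return cleaned


if __name__ == "__main__":
    log = []
    candidate = {"name": "A. Candidate", "skills": ["SQL"], "age": 41, "ethnicity": "n/a"}
    print(validate_input(candidate, log))
    print(log)
```

Keeping the log as structured entries rather than free text makes it easier to spot recurring data issues, for example a source system that keeps sending the same protected field.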

Privacy and Security Safeguards

Pythia provides essential tools to keep data protected and compliant with regulations. With advanced input and output validators, Pythia removes sensitive data (such as personally identifiable information) and blocks malicious inputs. This helps you meet the privacy standards of HIPAA and GDPR.

Pythia also secures AI systems from potential vulnerabilities by reinforcing cybersecurity standards. It offers prompt injection prevention to guard against manipulation attempts. Likewise, the “Secrets Present” validator further protects against data breaches by masking sensitive information like API keys. With Pythia, you can ensure AI operates within the bounds of both ethical and regulatory standards.
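To illustrate what masking sensitive data can look like, here is a minimal regex-based sketch. It is not Pythia’s implementation. The two patterns (an email address and a key that starts with a prefix such as `sk_`) are simplified assumptions, and production validators typically cover many more formats.

```python
import re

# Simplified, assumed patterns for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SECRET_RE = re.compile(r"\b(?:sk|api|key)[-_][A-Za-z0-9]{16,}\b")


def mask_sensitive(text: str) -> str:
    """Replace email addresses and key-like strings with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = SECRET_RE.sub("[SECRET]", text)
    return text


if __name__ == "__main__":
    print(mask_sensitive("Contact jane@example.com with key sk_abcdefghijklmnop1234"))
```

Running the same function on both the prompt and the model’s response covers the input and output sides described above.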

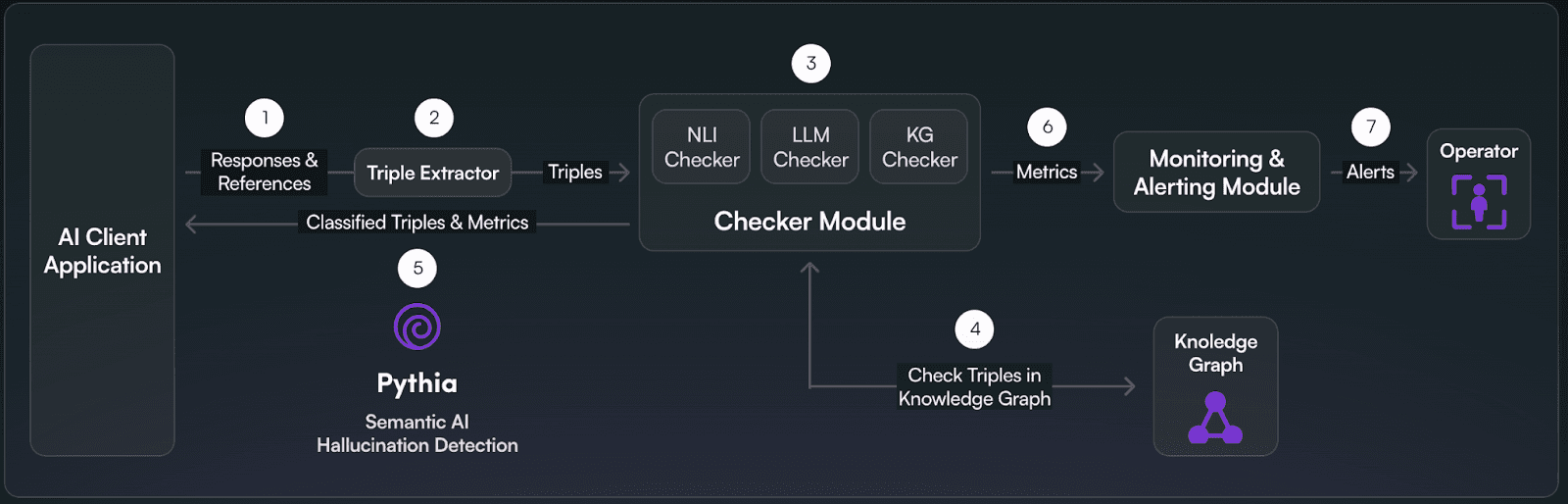

Real-Time Hallucination Detection

Pythia’s real-time hallucination detection acts like a lie detector for your AI. With the support of advanced algorithms, knowledge graphs, and RAG, Pythia ensures every response is grounded in reality.

The platform extracts knowledge triplets that capture the full context of AI responses, then classifies each one into a clear type: entailment, contradiction, neutral, or missing facts.

For instance, suppose an AI system claims that turmeric completely cures arthritis in response to a question about turmeric’s benefits for joint pain. Pythia breaks the AI response down into subject-predicate-object triplets, such as "turmeric - cures - arthritis."

Next, Pythia classifies each triplet by comparing it against a vast knowledge graph and reference datasets. Using entailment checks, Pythia categorizes each triplet based on its alignment with known information:

Entailment: If a triplet aligns with verified facts in the knowledge graph, like "Turmeric may help reduce joint pain due to its anti-inflammatory properties," it is marked as "entailment."

Contradiction: If the triplet conflicts with verified facts (for example, "Turmeric does not cure arthritis but may provide symptom relief"), it is flagged as a "contradiction."

Neutral: If the triplet neither confirms nor denies the established facts, as with mixed research results, it is classified as "neutral."

Missing Facts: If essential supporting information is missing (such as “Clinical trials show symptom improvement but not a cure”), it is flagged for missing facts.

In this example, the AI’s statement that turmeric can "completely cure arthritis" contradicts verified data in the knowledge graph. Pythia detects this as a hallucination in real time and asks operators to review or correct the response. With this feedback integrated into the AI system, you can instantly modify the response to be accurate. For instance, it might add a clarification like, "While turmeric may reduce symptoms, it does not cure arthritis."
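The classification step can be sketched with a toy example. The code below uses exact-match lookups against two small hand-written fact sets, which is a deliberate simplification: a real system would compare triplets against a knowledge graph using entailment models. The names `Triplet`, `SUPPORTED`, `REFUTED`, `classify`, and `missing_facts` are hypothetical and are not part of Pythia’s API.

```python
from typing import NamedTuple


class Triplet(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Toy reference data standing in for a knowledge graph (assumed for illustration).
SUPPORTED = {Triplet("turmeric", "may reduce", "joint pain")}
REFUTED = {Triplet("turmeric", "cures", "arthritis")}


def classify(triplet: Triplet) -> str:
    """Label a triplet extracted from an AI response against the reference facts."""
    if triplet in SUPPORTED:
        return "entailment"
    if triplet in REFUTED:
        return "contradiction"
    return "neutral"


def missing_facts(response_triplets) -> set:
    """Reference facts that the response failed to mention."""
    return SUPPORTED - set(response_triplets)


if __name__ == "__main__":
    response = [Triplet("turmeric", "cures", "arthritis")]
    for t in response:
        print(t, "->", classify(t))
    print("missing:", missing_facts(response))
```

Here the claim from the turmeric example is labeled a contradiction, and the supported fact about symptom relief shows up as missing, which mirrors the correction described above.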

Customizable Alerting System

Pythia’s customizable alerting system lets you take full control of AI observability. You can set real-time alerts for specific concerns like model drift and accuracy drops. When a threshold is crossed, Pythia sends immediate notifications to stakeholders through email, SMS, or enterprise messaging, enabling quick action.
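Threshold-based alerting of this kind follows a simple pattern, sketched below in Python. The `AlertRule` class, the metric names, and the threshold values are hypothetical, and the sketch only builds the alert messages. Delivery by email, SMS, or messaging would depend on your own tooling.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str       # name of the monitored metric
    threshold: float  # value that triggers the alert
    direction: str    # "above" or "below"


def evaluate(rules, metrics):
    """Return one message for every rule whose threshold has been crossed."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            continue  # metric not reported in this cycle
        if rule.direction == "above":
            breached = value > rule.threshold
        else:
            breached = value < rule.threshold
        if breached:
            alerts.append(f"{rule.metric}={value} is {rule.direction} threshold {rule.threshold}")
    return alerts


if __name__ == "__main__":
    rules = [AlertRule("accuracy", 0.9, "below"), AlertRule("drift_psi", 0.25, "above")]
    print(evaluate(rules, {"accuracy": 0.85, "drift_psi": 0.1}))
```

Defining rules as data rather than hard-coded checks lets compliance teams adjust thresholds without changing the monitoring code.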

Pythia also supports integration with knowledge graphs and custom datasets to align AI responses with industry standards. You can incorporate proprietary datasets (such as drug research or financial data) to validate responses against your specific compliance requirements. This customization ensures AI systems operate within the operational standards of your industry.

Custom datasets offer even more specialized support. In pharmaceuticals, for example, integrating proprietary research enables AI to align its recommendations with FDA guidelines.

How Pythia Strengthens AI Compliance

With evolving regulations, companies now face immense pressure to ensure that their AI systems are reliable, fair, and secure. Pythia’s observability features are designed to help organizations meet these standards with confidence.

Pythia offers you real-time monitoring for AI accuracy, fairness, privacy, and security. From spotting hallucinations to removing sensitive data, Pythia helps you uphold ethical standards and stay within regulatory boundaries. Additionally, features like custom dataset integration and thorough audit logging allow you to trace AI decisions against your specific industry requirements.

Pythia allows you to address biases, maintain performance, and ensure accountability in every AI-driven decision. Let’s explore how Pythia’s powerful features can future-proof your AI operations:

Detect Hallucinations Instantly: Keep AI reliable and trustworthy by flagging inaccuracies in real time.

Use Knowledge Graphs for Context: Enable smarter, compliant decisions by grounding AI outputs in factual data.

Track Accuracy and Fairness: Ensure ethical and precise responses using custom accuracy metrics to monitor bias and relevance.

Detect Issues Instantly: Rely on real-time alerts to address model drift and data shifts before they escalate.

Protect Sensitive Data: Leverage Pythia’s PII detection and secrets masking to secure information and prevent vulnerabilities.

Are you ready to embed trust and compliance into every AI decision? Contact us to learn how Pythia can help your AI systems operate at peak performance while setting a new standard for reliable, ethical AI.