How To

Haziqa Sajid

May 16, 2024

Amazon Web Services (AWS) offers a fully managed AWS Bedrock that streamlines generative AI application development. AWS Bedrock offers developers pre-trained generative AI foundation models and customization tools. The foundation models are the building blocks in generative AI applications, and customization tools allow customizing these models according to specific use cases.

Wisecube’s Pythia further boosts the performance of generative AI applications with continuous hallucination monitoring and analysis. Real-time AI hallucination detection directs developers toward continuously improving LLMs, resulting in reliable outputs.

In this guide, we’ll integrate Wisecube Pythia with AWS Bedrock using the Wisecube Python SDK.

Getting an API key

To authenticate Wisecube Pythia, you need a unique API key. To get your unique API key, click the lock icon in the left sidebar of your Pythia dashboard and copy your API key.

Installing Wisecube

You must install the Wisecube Python SDK in your Python environment to detect AI hallucinations with Pythia. Copy the following command in your Python console and run the code to install Wisecube:

To learn more about the Wisecube SDK, visit the Wisecube page on PyPI.

Installing AWS, LangChain, and Vector Database

Developing an LLM with AWS Bedrock requires installing AWS, LangChain, and a vector database. The code snippet below installs the following libraries:

awscli: Facilitates interaction with AWS from the terminal.

boto3: Provides an interface to interact with Amazon Web Services.

langchain: Allows to create Natural Language Processing (NLP) pipelines.

faiss-cpu: Implements the CPU-only version of the Faiss database.

Authenticating API key

You need to authenticate the Wisecube API key to interact with Pythia. Copy and run the following command to authenticate your API key:

Developing LLM with AWS Bedrock

Developing an LLM with AWS Bedrock goes through the following steps:

Import Required Libraries

The following libraries are required to build an NLP pipeline and interact with AWS. Copy and run the code snippet below to import these libraries:

Create a Bedrock Client

Next, you need to create a Bedrock client to use the service finally. service_name specifies that we’re using bedrock_runtime service, and region_name sets the region to us-east-1, which can differfor your configuration. Lastly, we define modelId, accept, and contentType variables to specify your pre-trained model and set the data to JSON format. We’re using the Amazon Titan Express model here.

Build an LLM and Generate Response

Now, you can build your LLM using the model specified above. This begins with building the request body, which includes the inputText that specifies the user query and textGenerationConfig. This defines the configuration for the text generation process.

The following code converts the body into a JSON object and uses bedrock.invoke_model method to send the user query to the AI model. Lastly, it extracts the raw content from the response using response.get(“body”).read() and converts the raw content to JSON format using json.loads.

After we get an LLM response, response_body['results'][0]['outputText'] extracts only the string part from the response because Pythia accepts arguments in string format.

Using Pythia to Detect Hallucinations

Now that you’ve got an LLM that generates responses based on user queries, you can integrate it with Pythia to detect real-time hallucinations. To do this, you need to store data in a vector database, which will act as a reference in Pythia for fact verification. This can be achieved in two simple steps:

Use Retrieved Data as Reference

The following code snippet loads diabetes.csv data, generates vector embeddings for data, and creates two functions, get_index() and get_similarity_search_results(). The get_index() function returns an in-memory vector database to be used in the application.

The get_similarity_search_results() function retrieves similar data points from the vector database based on the input vector. Lastly, it flattens the retrieved similar data points and returns them.

Use Pythia To Detect Hallucinations

Now, we can use Pythia to detect real-time hallucinations in LLM responses using the references retrieved in the previous step. To do this, we define our question, which is the same as the question we passed in the body object above. Then, we create a vector index using the get_index() function and retrieve the reference with get_similarity_search_results function. Don’t forget to extract the string portion from the reference like we did for the response above.

Lastly, the client.ask_pythia detects hallucinations based on reference, response, and question provided to it. Note that our response is passed as response_text in the following code because our LLM responses are stored in the response_text variable.

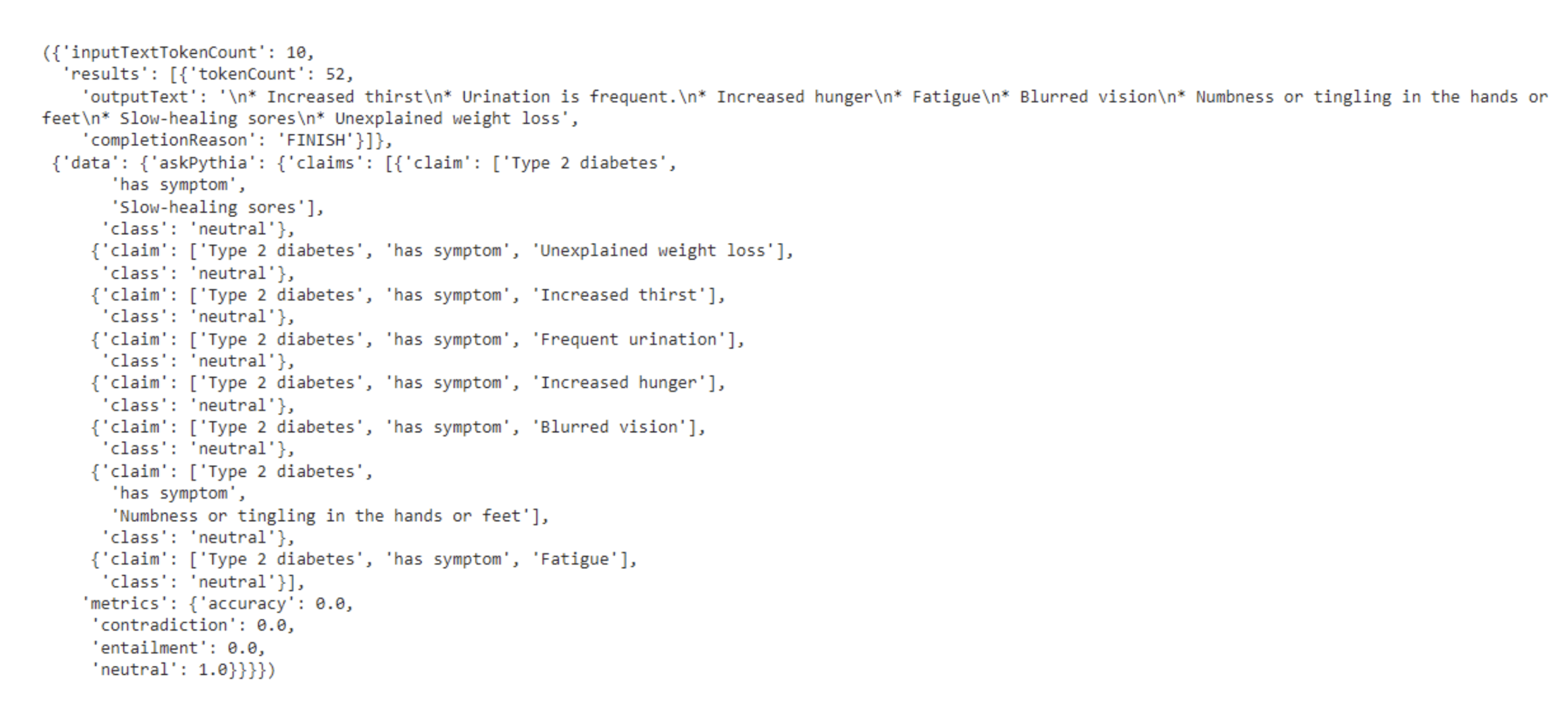

The final output for our query is in the screenshot below, where SDK Response categorizes LLM claims into relevant classes, including entailment, contradiction, neutral, and missing facts. Finally, it highlights the LLM's overall performance with the percentage contribution of each class in the metrics dictionary.

Full Code

The steps we discussed are laid out in a procedural approach to make it easier to understand. However, compiling logic into functions is recommended in Python applications to make the code reusable, clean, and maintainable. Therefore, we compile the logic to develop an LLM with AWS Bedrock and use Pythia to detect AI hallucinations into reusable functions:

Benefits of Using Pythia with AWS Bedrock

Pythia offers a range of benefits when integrated into your workflows. These benefits allow LLM developers to continually improve their systems while tracking performance with the help of Pythia. The benefits of integrating Pythia with AWS Bedrock include:

Advanced Hallucination Detection

Pythia uses a billion-scale knowledge graph with 10 billion biomedical facts and 30 million biomedical articles to verify LLM claims and detect hallucinations. Pythia extracts claims from LLM responses in the form of knowledge triplets and verifies them against the billion-scale knowledge graph. Together, these increase the contextual understanding and reliability of LLMs.

Real-time Monitoring

Pythia continuously monitors LLM responses against relevant references and generates an audit report. This allows developers to address risks and fix hallucinations as soon as they occur.

Robust LLMs

Real-time hallucination detection against a vast range of information promises the development of robust LLMs. These LLMs generate reliable outputs, resulting in disruptive biomedical research.

Enhanced Trust

Reliable LLMs improve company reputation and enhance user trust in AI. Users are more likely to adopt AI systems when they trust AI.

Privacy Protection

Pythia protects customer data so developers can focus on the LLM performance without worrying about losing their data. This makes Pythia a trusted hallucination detection tool.

Contact us today to get started with Pythia and build reliable LLMs to speed up your research process and enhance user trust.